This is a guest post from Laura Breen, a PhD student with research interests in international law, global governance, and emerging technology; Gaea Morales, a PhD student with research interests in environmental security and global-local linkages; Joseph Saraceno, a PhD candidate with research interests in political institutions and quantitative methodology; and Kayla Wolf, a PhD student with research interests in gender, politics, and political socialization. All are completing the PhD program in Political Science and International Relations at the University of Southern California.

It’s not news that political science has a gender problem. This blog has multiple entries on the gender gap, and anyone who spends 20 minutes on academic Twitter or at a grad student happy hour is likely to encounter firsthand accounts of its effects. If firsthand accounts aren’t enough, there’s plenty of excellent peer-reviewed work showing that the phenomenon exists, including Maliniak et al.’s finding that women are “systematically cited less than men” in IR, and Dawn Langan Teele and Kathleen Thelen’s 2017 article, which found a comparable gap even when accounting for women’s share of the profession.

A common talking point about the gender gap in political science is that things have been getting better, and the gender balance in publications will steadily even out over time as gender disparities in society are minimized and the number of women in the profession grows. In short: we are approaching parity, all it takes is time. However, for many scholars, and particularly women in political science, this narrative of progress conflicts with lived experience and observations of who (and what) we see published in top journals.

The Data

As part of a simple data visualization project gone off the rails, we gathered four years of data to see whether the optimistic belief that things are really getting better bears out empirically. We were particularly interested in the years since the last comprehensive examination of gendered political science publication rates.

Building on the dataset Teele and Thelen created to examine gendered publication rates across ten journals from 1999-2015, we hand-coded author gender and order for all articles across the original journals examined in their article for the years 2016 to 2019. These included Journal of Politics, American Political Science Review, Comparative Politics (CP), International Organization, Comparative Political Studies, Journal of Conflict Resolution (JCR), Perspectives on Politics, Political Theory, and World Politics. To collect author gender for the years 2016 onwards, we coded gender based on the pronouns used in personal website and department biographies.

Through this process, we coded approximately 2500 additional entries, resulting in an updated dataset of 10,410 articles spanning 20 years of publication data from 1999 through 2019. For the purpose of our analysis, we visualized a subset of research articles only, including research notes.

So, how are things going?

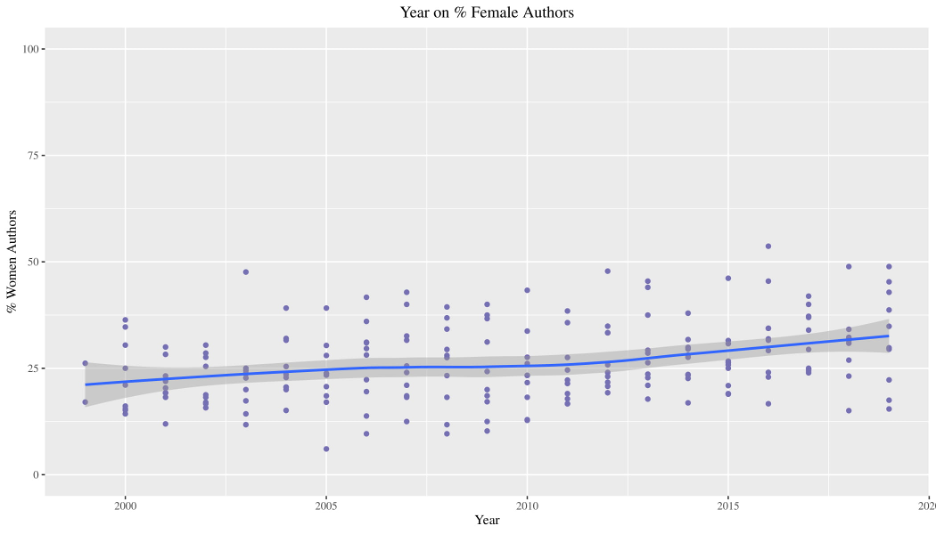

In a nutshell: not great, but we think we knew that already. Let’s start with some basic summary statistics. Across all 199 journal-year observations, the mean share of women among published authors is 26.4% per journal, an average of 16 women authors per year. The minimum percentage of women authors recorded was 6% at JCR in 2004, and the maximum recorded was 53% at CP in 2016. Incidentally, CP in 2016 was also the only observation in which a journal reached or passed the 50% mark for percent of women authors published. Looking at the twenty-year trend, each additional year between 1999 and 2019 is associated with a 0.34% increase in women’s authorship.

Percentage of Women Authors by Journal, 1999-2019
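For readers who want to run the same kind of check on their own data, here is a minimal sketch of how the summary statistics and yearly trend described above could be computed. It assumes a simple least-squares line of percent women on year, which is our guess at a reasonable approach rather than a description of the exact model behind the 0.34% figure. The records, journal names, and function names are hypothetical and made up for illustration; they are not the post's data.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical toy records: (journal, year, women_authors, total_authors).
# These numbers are invented for illustration and are NOT the post's data.
records = [
    ("Journal A", 1999, 2, 10),
    ("Journal A", 2009, 3, 10),
    ("Journal A", 2019, 4, 10),
    ("Journal B", 1999, 1, 10),
    ("Journal B", 2009, 2, 10),
    ("Journal B", 2019, 3, 10),
]


def journal_year_shares(rows):
    """Return {(journal, year): percent of published authors who are women}."""
    women = defaultdict(int)
    total = defaultdict(int)
    for journal, year, n_women, n_authors in rows:
        women[(journal, year)] += n_women
        total[(journal, year)] += n_authors
    return {key: 100.0 * women[key] / total[key] for key in total}


def ols_slope(xs, ys):
    """Slope of a simple least-squares line of ys on xs."""
    x_bar, y_bar = mean(xs), mean(ys)
    numerator = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    denominator = sum((x - x_bar) ** 2 for x in xs)
    return numerator / denominator


shares = journal_year_shares(records)
years = [year for (_, year) in shares]
pcts = [shares[key] for key in shares]

mean_share = mean(pcts)        # mean percent women across journal-years
slope = ols_slope(years, pcts)  # change in percent women per additional year

print(f"Mean share of women authors: {mean_share:.1f}%")
print(f"Estimated change per year: {slope:.2f}")
```

Each journal-year is one observation here, so a journal that publishes many articles counts the same as one that publishes few. Weighting by article count would be a different, equally defensible choice.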

Gender and Co-Authorship

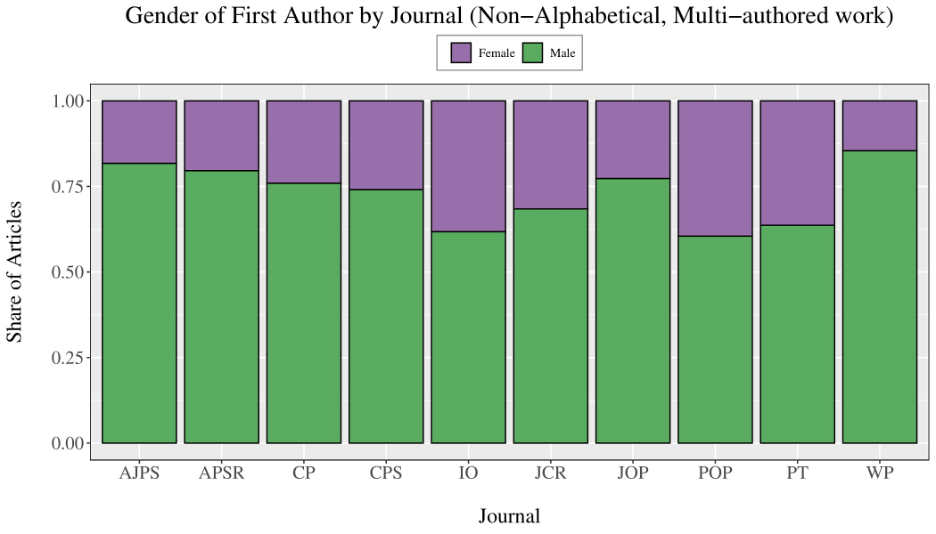

We also looked at the percentage of women first authors across all journals, and found that first authors were much more likely to be men than women. This holds even when only taking into account articles with multiple authors not listed in alphabetical order.
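The alphabetical-order check matters because a first-listed author only signals lead authorship when the byline is not simply sorted by surname. A minimal sketch of that filter follows, assuming each article is stored as a list of (surname, gender) pairs in byline order. The data layout, the "W"/"M" labels, and the function names are hypothetical, and the toy bylines are invented.

```python
def is_alphabetical(surnames):
    """True if the surnames already appear in case-insensitive sorted order."""
    keys = [s.casefold() for s in surnames]
    return keys == sorted(keys)


def women_first_author_share(articles, non_alphabetical_only=False):
    """Percent of articles whose first-listed author is coded as a woman.

    With non_alphabetical_only=True, keep only multi-author articles whose
    byline is NOT in alphabetical order, so first position is more likely
    to reflect contribution than surname.
    """
    eligible = []
    for authors in articles:
        if non_alphabetical_only:
            surnames = [surname for surname, _ in authors]
            if len(authors) < 2 or is_alphabetical(surnames):
                continue
        eligible.append(authors)
    if not eligible:
        return None
    women_first = sum(1 for authors in eligible if authors[0][1] == "W")
    return 100.0 * women_first / len(eligible)


# Hypothetical toy bylines, invented for illustration only.
articles = [
    [("Adams", "W")],                                # solo author
    [("Baker", "M"), ("Chen", "W")],                 # alphabetical byline
    [("Zhou", "M"), ("Diaz", "W")],                  # non-alphabetical
    [("Young", "W"), ("Evans", "M")],                # non-alphabetical
    [("Smith", "M"), ("Jones", "M"), ("Lee", "W")],  # non-alphabetical
]

share_all = women_first_author_share(articles)
share_non_alpha = women_first_author_share(articles, non_alphabetical_only=True)

print(f"All articles: {share_all:.1f}% women first authors")
print(f"Non-alphabetical bylines only: {share_non_alpha:.1f}% women first authors")
```

One caveat with this sketch: a two-author byline is in alphabetical order half the time by chance, so the filter drops some articles where order was in fact deliberate.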

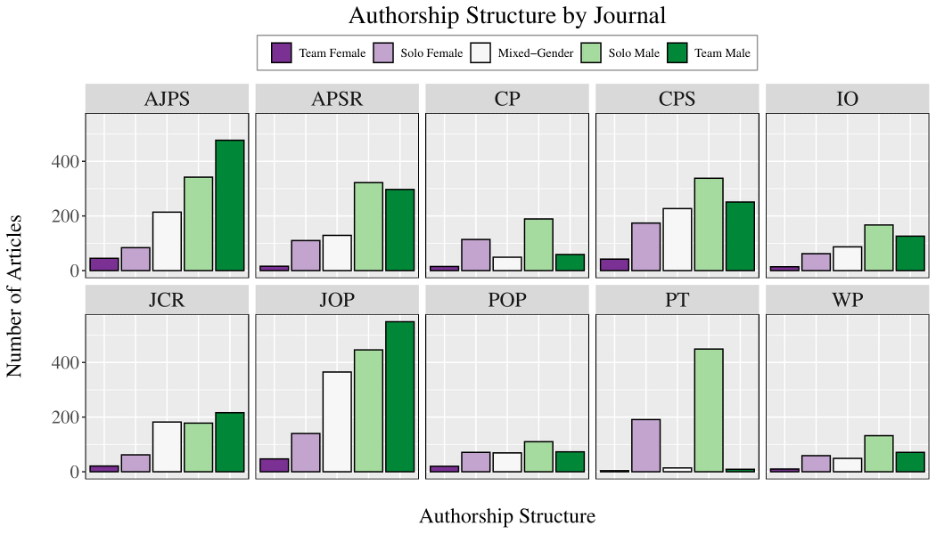

Likewise, a survey of authorship structure demonstrated that solo male authors or all male authorship teams dominated across all journals, with mixed gendered authorship teams and all female authorship teams relatively less common. This reflects the observation that women are not equally benefitting from the co-authorship revolution, as has been highlighted by Paul Djupe, Amy Smith, and Anand Sokhey. We did come across an article with 13 authors and no women that we felt was particularly emblematic of this trend.

Feeding the Pipeline

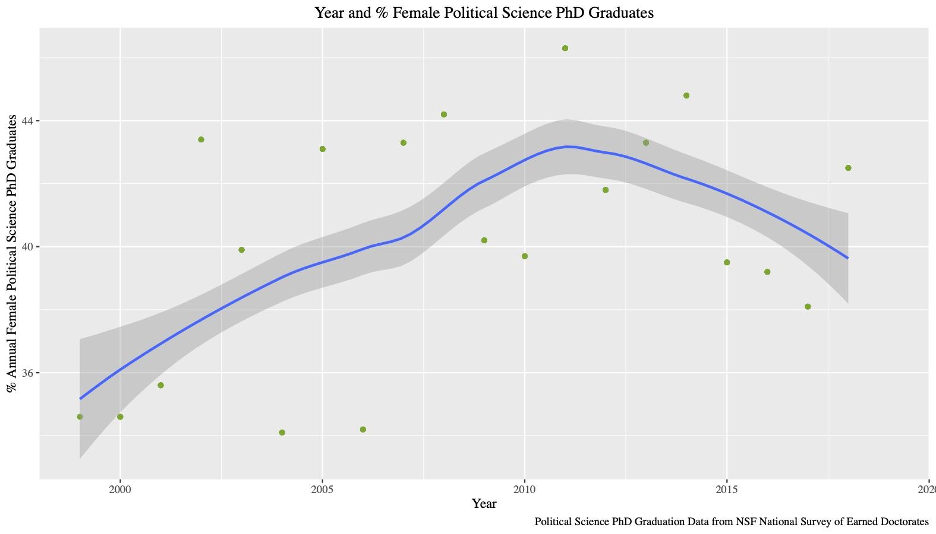

Finally, in an attempt to get a measure of changes in women’s share of the profession over time, in the absence of other open source options we reference the NSF’s National Survey of Earned Doctorates data to observe the percentage of all Political Science PhDs per year earned by women. While we found that the percentage of PhD recipients was a bad proxy for women’s share of the profession, we did find that over the same time period as our journal analysis, percentages of women receiving political science PhDs rose steadily through 2010 before beginning a downward trend through 2018, the last year of available data.

What does this mean?

So, what does this mean? First, looking at 20 years of political science publication data does show a small, statistically significant increase for women’s authorship over time. However, the magnitude of the effect of time alone is minor in terms of gains in parity. If we desire a more balanced discipline, simply waiting for increased gender equality in society in hopes that it will trickle down into political science is not going to result in the significant changes we want.

Why is this happening?

The good news is there is a great deal of excellent scholarship about potential causes of this disparity on both the supply and demand side of the publishing process in political science. On the supply side of the problem is a submission gap, or disproportionate submission of articles to top journals by men versus women. Djupe, Smith, and Sokhey, also building on the work of Teele and Thelen, found evidence that submission rates play a significant role in the gender publication gap.

Compelling research by Ellen Key and Jane Sumner has suggested that the submission gap may not be driven by different levels of risk aversion between men and women, but by the fact that research areas with higher numbers of women scholars are also seen as niche, and more appropriate for specialist journals. This in turn raises the question of whether certain topics are more highly valued, and given more space, than topics seen as “women’s political science.” These explanations don’t even touch on matters related to the amount of household and emotional labor that often falls to women, eating into valuable research time. This final factor has gotten more attention recently, as it seems likely to lead to a significant drop in submission of women-authored research during and following the Covid-19 pandemic.

What do we do about this?

A treasure trove of work by scholars points to potential ways to address the gender publishing gap. First, factors such as increasing access to affordable and safe childcare, better national parental leave policy, and shifting towards a more even distribution of tasks in the home are all important building blocks of getting more women’s research published. Amanda Murdie has written on the need to establish a new norm against the gender citation gap, and two of her recommendations from that framework apply just as well to addressing the gender publication gap: active and passive socialization, and shaming, that is, calling out instances in which the norm against the gap is violated.

Beyond individually encouraging early career scholars to consider submitting to top journals, there is also a role for editors. Djupe, Smith, and Sokhey suggest that in order to bridge the submission gap, editors at top journals should take concrete actions to limit the potential costliness of women aiming high with their submissions by attempting to decrease review process times. If part of the issue is the perception of gendered research, we as a discipline (and especially editors) should actively consider which types of research we consider to be valuable and worthy of a spot in a generalist journal, and what led to the construction of those beliefs in the first place.

Finally, we want to talk about who gets counted when we do research on the state of the discipline. We were able to reliably code author gender from website pronouns in a way that can’t be done with race, ethnicity, country of origin, and other factors that intersect with gender and publication rates. This analysis therefore leaves out those who are least represented in the profession, including people of color, and women of color in particular. A survey of gendered publication rates barely scratches the surface of who is and isn’t being heard in political science, and how institutionalized racism both inside and outside the academy affects who and what gets published. We should give more funding, value, and attention to examining race and other factors playing a role in publication and career advancement that cannot be quickly or cheaply surveyed like we did here with gender. That work is desperately needed.

Likewise, we found that low-cost computational methods were systematically less accurate for gathering data involving non-western names. We originally considered coding the last four years of publications using the genderize API, which uses social media data to predict gender by first name, and is commonly used in work requiring gender identification across a large dataset. However, we found that even in cases when genderize predicted a high level of certainty, a significant portion of non-western names in our dataset were assigned the incorrect gender. There’s a good reason tools like genderize are used for this type of work; in many cases they are the only feasible option, giving us data where we’d otherwise have none. However, surveying the field with methods that are systematically more likely to miscount or misrepresent those who have already been marginalized in the discipline has consequences. Alternatives require that significantly more time or resources be devoted to meta scholarship. A team of four PhD students trying to distract themselves from the apocalypse by hand coding a few years of data is certainly not a universal fix.
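For anyone weighing a similar tradeoff, one way to audit an automated tool before relying on it is to hand code a sample and compare. The sketch below works offline on predictions that have already been collected. It assumes each row holds the tool's predicted gender, the certainty score it reported, the hand-coded gender, and a grouping label chosen by the researcher. The field names, the 0.9 threshold, and the rows themselves are hypothetical placeholders, not our data or our procedure.

```python
def high_confidence_error_rate(rows, threshold=0.9):
    """For each group, the share of high-confidence predictions that
    disagree with the hand-coded gender."""
    checked = {}
    wrong = {}
    for row in rows:
        if row["probability"] < threshold:
            continue  # only audit predictions the tool reported as confident
        group = row["group"]
        checked[group] = checked.get(group, 0) + 1
        if row["predicted"] != row["hand_coded"]:
            wrong[group] = wrong.get(group, 0) + 1
    return {group: wrong.get(group, 0) / checked[group] for group in checked}


# Hypothetical placeholder rows, invented for illustration only.
sample = [
    {"group": "A", "predicted": "male", "probability": 0.99, "hand_coded": "male"},
    {"group": "A", "predicted": "female", "probability": 0.95, "hand_coded": "female"},
    {"group": "A", "predicted": "male", "probability": 0.97, "hand_coded": "male"},
    {"group": "A", "predicted": "female", "probability": 0.60, "hand_coded": "male"},
    {"group": "B", "predicted": "male", "probability": 0.98, "hand_coded": "female"},
    {"group": "B", "predicted": "female", "probability": 0.93, "hand_coded": "female"},
    {"group": "B", "predicted": "male", "probability": 0.50, "hand_coded": "male"},
]

error_rates = high_confidence_error_rate(sample)
for group, rate in sorted(error_rates.items()):
    print(f"Group {group}: {rate:.0%} of high-confidence predictions were wrong")
```

If the error rate among confident predictions differs sharply between groups, a single certainty cutoff will not protect against the kind of systematic miscounting described above.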

Joshua Busby is a Professor in the LBJ School of Public Affairs at the University of Texas-Austin. From 2021-2023, he served as a Senior Advisor for Climate at the U.S. Department of Defense. His most recent book is States and Nature: The Effects of Climate Change on Security (Cambridge, 2023). He is also the author of Moral Movements and Foreign Policy (Cambridge, 2010) and the co-author, with Ethan Kapstein, of AIDS Drugs for All: Social Movements and Market Transformations (Cambridge, 2013). His main research interests include transnational advocacy and social movements, international security and climate change, global public health and HIV/ AIDS, energy and environmental policy, and U.S. foreign policy.
