One of the more specious criticisms of the “stopkillerrobots” campaign is that it is using sensationalist language and imagery to whip up a climate of fear around autonomous weapons. So the argument goes, by referring to autonomous weapons as “killer robots” and treating them as a threat to “human” security, campaigners manipulate an unwitting public with robo-apocalyptic metaphors ill-suited to a rational debate about the pace and ethical limitations of emerging technologies.

For example, in the run-up to the campaign launch last spring, Gregory McNeal at Forbes opined:

HRW’s approach to this issue is premised on using scare tactics to simplify and amplify messages when the “legal, moral, and technological issues at stake are highly complex.” The killer robots meme is central to their campaign and their expert analysis.

McNeal is right that the issues are complex, and of course it’s true that in press releases and sound bites campaigners articulate this complexity in ways designed to resonate outside of legal and military circles (as all good campaigns do), saving more detailed and nuanced arguments for in-depth reporting. But McNeal’s argument about this being a “scare tactic” only makes sense if people are likelier to feel afraid of autonomous weapons when they are referred to as “killer robots.”

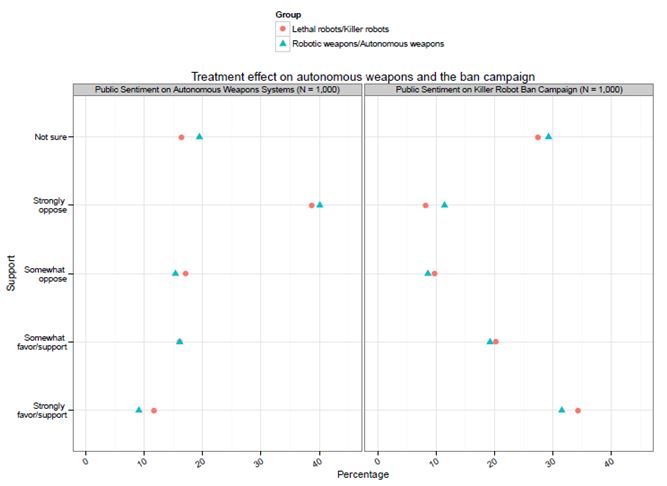

Is that true? To find out, I included a priming question in the survey of public opinion on autonomous weapons I conducted last month through YouGov.com. This is part of a new research project on the relationship between popular culture and human security norms, so I was keen to see whether the campaign’s rhetoric actually exerted this priming effect. 1000 US citizens were asked how they felt about the idea of outsourcing targeting decisions to machines. 500 were asked the first question using dry military jargon and stressing the autonomy of “weapons,” whereas the other 500 were asked about “lethal robots,” invoking the idea of robots as agents, as soldiers rather than weapons. In the second question, 500 were asked about “autonomous weapons” and the other 500 were asked about “killer robots.”

I found almost no effect on citizens’ sentiment based on this varied wording, and what effect I did observe was within the margin of error for the survey. As I posted previously, the majority of Americans across all ideologies and demographic groups oppose the development and use of autonomous weapons; nearly 40% are “strongly opposed.” This doesn’t change much at all if you ask them about “lethal robots” versus “robotic weapons.” Moreover, a majority of Americans surveyed support the idea of a ban campaign, and this too doesn’t change if you refer to the campaign as the “Campaign to Stop Killer Robots” versus “a campaign to ban the use of fully autonomous weapons” (question 2).
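For readers who want to see what “within the margin of error” means for a split sample like this one, here is a minimal Python sketch. It assumes simple random sampling, which is a simplification (it does not model whatever weighting the actual survey used), and the percentages passed in at the bottom are purely hypothetical placeholders, not the survey's results. The function names are my own for illustration.

```python
import math

def margin_of_error(n, p=0.5, z=1.96):
    """Approximate 95% margin of error for a proportion,
    assuming a simple random sample of size n (a simplification)."""
    return z * math.sqrt(p * (1 - p) / n)

def difference_within_noise(p1, n1, p2, n2, z=1.96):
    """Return True if the gap between two group proportions is no larger
    than the 95% margin of error of that difference."""
    se = math.sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)
    return abs(p1 - p2) <= z * se

# Each wording was shown to a half-sample of 500 respondents.
print(f"Margin of error for n=500: +/-{margin_of_error(500) * 100:.1f} points")

# HYPOTHETICAL shares opposed under the two wordings (not real survey data).
print(difference_within_noise(0.55, 500, 0.52, 500))
```

Under these assumptions, each half-sample of 500 carries a margin of error of roughly 4.4 percentage points, so a gap of a few points between the two wordings cannot be distinguished from sampling noise.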

In short, people are afraid of “killer robots” because “killer robots” are scary, not because of the “killer robot” label. It’s true that the term “killer robots” does seem to have an impact on media coverage of the campaign (which I’ll discuss more presently), but the claim that it is designed to frighten or that it does frighten the public appears to be false. NGOs are channeling popular concern about autonomous systems, not manufacturing it.

Charli Carpenter is a Professor in the Department of Political Science at the University of Massachusetts-Amherst. She is the author of ‘Innocent Women and Children’: Gender, Norms and the Protection of Civilians (Ashgate, 2006), Forgetting Children Born of War: Setting the Human Rights Agenda in Bosnia and Beyond (Columbia, 2010), and ‘Lost’ Causes: Agenda-Setting in Global Issue Networks and the Shaping of Human Security (Cornell, 2014). Her main research interests include national security ethics, the protection of civilians, the laws of war, global agenda-setting, gender and political violence, humanitarian affairs, the role of information technology in human security, and the gap between intentions and outcomes among advocates of human security.

The more troubling argument, it strikes me, is that the label ‘killer robots’ undermines the seriousness of the issue. That is, by evoking ‘Terminator’-esque images in the public’s mind, the label means the issue can and will be belittled by those with an interest in doing so. Why use comic-book-like language for such a serious issue? The problem is certainly not that such language is designed to instill fear but, rather, that it might have the opposite effect.

That was part of what I wanted to figure out: there were two competing hypotheses about what effect this might have, one positive, one negative. Actually it turns out not to have much effect either way, at least on this test.

This is really interesting. I wonder what label would trivialize the issue, if not ‘killer robots.’

It seems to me that this critique applies to the NGOs running the campaign as well as those criticizing it. Both sides probably believed that “killer robots” would be a way of swaying opinions AND obtaining publicity. Turns out both seem to be wrong about the prior. So why single out the counter-campaign?

Well, two things: one, this isn’t a critique so much as a response to a critique, so the reason I’m singling out the counter-campaign is that they are the ones making a specious critique. And two, on the campaigners’ side, my conversations with practitioners in this community suggest the killer robot label was always more about engaging the media, which seems to have worked. (See future blog post.)

It might be sensationalist but it isn’t misleading. That’s more than you can say for most government-led security fear mongering.